SSDs have become a mainstream component of our day-to-day computing.

That said, there’s still a lot for the novice user to learn about them.

Here, we’ll take a look at SSD cache: what it is, what it does, and whether you might find it useful.

Here’s the Rundown on Whether SSDs Have Cache

SSDs have traditionally been equipped with a DRAM component. The SSD mainly uses this DRAM to cache its mapping table, which records where each piece of data is stored on the flash memory. This cache is essential for quickly locating the SSD’s data as soon as a task requests it. Recent years, however, have seen the rise of DRAM-less SSDs.

What is SSD Cache?

Before diving further, a short review of the term ‘SSD cache’ is in order.

A cache, in this instance, refers to a hardware or software storage location on your computer that holds the most recently and frequently accessed data and programs.

This allows for faster retrieval of these resources whenever they’re next needed.

With that in mind, it’s easy to see what SSD caching entails.

It simply means using an SSD as a cache for your system’s main storage, usually a slower HDD, and the reason is fairly straightforward.

SSDs are built on flash memory chips, which are much faster than the spinning platters of an HDD. That is also why SSD caching is known as flash caching.

This may sound like a daunting concept, but it is a simple setup that brings real benefits to your system.

The most noticeable benefit is that response and access times for programs and other resources on your computer improve.

This is because SSDs, as standalone components, have vastly superior read and write speeds compared to conventional HDDs.

Therefore, using one as a cache for your computer makes everything noticeably faster. This idea, although novel to some, has actually been around for quite a while.

Many heavy computer users have long employed this method to speed up their work.

SSD caching allows for faster access and retrieval of programs and other resources from the main HDD.
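To put rough numbers on the speed-up, here is a minimal Python sketch of the usual average-access-time calculation for a cache sitting in front of a slower drive. The latency figures and the names `effective_access_time`, `SSD_LATENCY_MS`, and `HDD_LATENCY_MS` are illustrative assumptions, not measurements of any particular hardware.

```python
# Illustrative latencies only: assumed orders of magnitude, not benchmarks.
SSD_LATENCY_MS = 0.1   # assumed random-read latency of the SSD cache
HDD_LATENCY_MS = 10.0  # assumed random-read latency of the main HDD


def effective_access_time(hit_rate: float, cache_ms: float, backing_ms: float) -> float:
    """Average time per request when `hit_rate` of requests are served by the cache.

    Every request checks the cache first; a miss then also pays for a read
    from the slower backing drive.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return cache_ms + (1.0 - hit_rate) * backing_ms


if __name__ == "__main__":
    for hit_rate in (0.0, 0.5, 0.9):
        avg = effective_access_time(hit_rate, SSD_LATENCY_MS, HDD_LATENCY_MS)
        print(f"hit rate {hit_rate:.0%}: about {avg:.2f} ms per request")
```

With these assumed figures, a 90% hit rate brings the average down to roughly a tenth of the uncached HDD time, which is why the benefit depends so heavily on the cache holding the right data.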

How Does SSD Cache Work?

Now that we’ve covered what SSD caching is, let’s look at how it works.

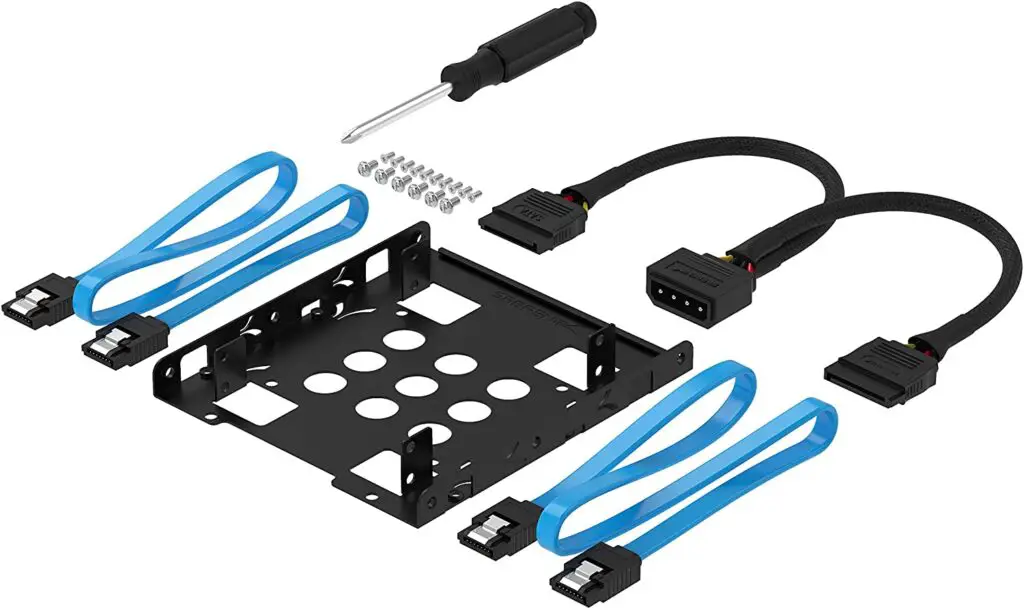

First, let’s see what it takes to set up an SSD cache. The process typically involves a few steps:

- Connect the SSD to your system.

- Using built-in or specialized third-party software, designate a portion of the available SSD space to be used as cache. (If you don’t use the whole drive for caching, the remaining space can be used for normal storage.)

- Then select the drive(s) or volumes on your system that you want the cache to speed up.

Next, let’s take a step back and recall what a ‘cache’ is.

It is a software or hardware component that is responsible for storing the most frequently accessed data and programs.

Caching is an important feature of any system, and as such, most if not all systems come with their own primary caches.

SSD caching is a secondary layer that supplements your system’s existing caches. Keeping this in mind helps explain how differently they work.

In a typical scenario, when a computer’s CPU needs data for a task, it first checks the DRAM cache, which is one of the primary caches on your computer.

If the data or program isn’t found there, it checks other primary caches before retrieving what it needs directly from the HDD.

With the introduction of SSD caching to your system, this process changes a bit.

If the resource requested isn’t found in the DRAM cache, the CPU will next scan the SSD cache for it.

If the caching software has previously identified it as an important, frequently used resource, it will be available from the SSD cache and can therefore be retrieved much more quickly.

In short, SSD caching keeps the most ‘cache-worthy’ resources close at hand on fast flash storage.
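The lookup order described above can be modelled with a small Python sketch: check a tiny DRAM tier, then the SSD cache, and only then the main HDD. This is a simplified illustration, not how an operating system or any caching product is actually implemented; the class names are hypothetical, and the least-recently-used (LRU) eviction and "copy into the SSD cache on a miss" rule are assumptions standing in for whatever heuristics real caching software uses.

```python
from __future__ import annotations

from collections import OrderedDict


class LRUCache:
    """Tiny least-recently-used cache standing in for one cache tier."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items: OrderedDict[str, bytes] = OrderedDict()

    def get(self, key: str) -> bytes | None:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: bytes) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


class TieredStorage:
    """Hypothetical model: DRAM cache, then SSD cache, then the main HDD."""

    def __init__(self, hdd: dict[str, bytes], dram_slots: int, ssd_slots: int) -> None:
        self.dram = LRUCache(dram_slots)
        self.ssd = LRUCache(ssd_slots)
        self.hdd = hdd
        self.served_from: list[str] = []  # which tier answered each read

    def read(self, key: str) -> bytes:
        data = self.dram.get(key)
        if data is not None:
            self.served_from.append("dram")
            return data
        data = self.ssd.get(key)
        if data is not None:
            self.served_from.append("ssd")
        else:
            data = self.hdd[key]     # slowest path: read from the main drive
            self.served_from.append("hdd")
            self.ssd.put(key, data)  # keep a copy in the SSD cache for next time
        self.dram.put(key, data)     # and in the small, fast DRAM tier
        return data


storage = TieredStorage({"a.txt": b"A", "b.txt": b"B"}, dram_slots=1, ssd_slots=4)
for name in ("a.txt", "b.txt", "a.txt"):
    storage.read(name)
print(storage.served_from)  # ['hdd', 'hdd', 'ssd']
```

The third read of `a.txt` misses the one-slot DRAM tier (it was pushed out by `b.txt`) but is still served from the SSD cache instead of the HDD, which is exactly the extra step SSD caching adds.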

Do All SSDs Have Cache?

SSDs are made up of many memory cells, so different pieces of data are stored in different parts of the drive.

That being said, whenever a read or write operation is requested, the SSD has to know exactly where the needed data is located.

Because of this, the SSD keeps a record, often called a mapping table, that points to where each piece of data is stored.

If one were to visualize it, picture a map: every location is easy to spot, and the roads to it are clearly marked.

This ‘map’ of the SSD is stored in the SSD’s DRAM cache. Given this crucial role, a DRAM cache has long been a staple feature of SSDs.
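To make the ‘map’ idea concrete, here is a minimal Python sketch of a logical-to-physical mapping table of the kind an SSD controller’s flash translation layer (FTL) maintains. The class and method names are hypothetical, and real firmware is far more involved (garbage collection, wear leveling, power-loss protection); the sketch only shows why every read first needs a map lookup, and why the map changes on every write.

```python
from __future__ import annotations


class FlashTranslationLayer:
    """Hypothetical, greatly simplified model of an SSD's logical-to-physical map."""

    def __init__(self, num_pages: int) -> None:
        self.pages: list[bytes | None] = [None] * num_pages  # physical flash pages
        self.mapping: dict[int, int] = {}  # logical block address -> physical page
        self.next_free = 0                 # next unused physical page

    def write(self, lba: int, data: bytes) -> None:
        # Flash pages are not overwritten in place, so every write lands on a fresh page.
        if self.next_free >= len(self.pages):
            raise RuntimeError("no free pages left (a real drive would garbage-collect)")
        self.pages[self.next_free] = data
        self.mapping[lba] = self.next_free  # point the map at the newest copy
        self.next_free += 1

    def read(self, lba: int) -> bytes:
        page = self.mapping[lba]  # step 1: look up the location in the map
        data = self.pages[page]   # step 2: fetch the data from that page
        if data is None:
            raise KeyError(lba)
        return data


ftl = FlashTranslationLayer(num_pages=8)
ftl.write(7, b"first version")
ftl.write(7, b"second version")
print(ftl.read(7), ftl.mapping[7])  # b'second version' 1
```

Because the map is consulted on every single read and updated on every write, keeping it in fast memory matters a great deal.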

Newer trends, however, have seen DRAM-less SSDs come onto the market. Naturally, they are cheaper to buy because of this omission.

The trade-off, as many would argue, is that they deny you what one might call ‘a true SSD experience’.

This is because, without DRAM, the drive has to fetch parts of its map from the slower flash memory itself, which makes DRAM-less SSDs slower than conventional SSDs.
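A rough way to see this cost is to count how often a drive would have to go back to flash just to read its own map. The Python sketch below assumes, for illustration only, that a DRAM-less drive can keep a small, fixed number of map entries in fast on-controller memory and evicts the least recently used one; real controllers use their own (often undisclosed) strategies, and some NVMe drives can also borrow host RAM through the Host Memory Buffer feature. The function name and all sizes are hypothetical.

```python
from __future__ import annotations

import random
from collections import OrderedDict


def count_map_fetches(lookups: list[int], cached_entries: int) -> int:
    """Count map lookups that miss a small LRU cache of mapping-table entries.

    Each miss stands for an extra read of part of the map from flash.
    """
    cache: OrderedDict[int, None] = OrderedDict()
    misses = 0
    for lba in lookups:
        if lba in cache:
            cache.move_to_end(lba)  # hit: entry is already in fast memory
            continue
        misses += 1                 # miss: this map entry must come from flash
        cache[lba] = None
        if len(cache) > cached_entries:
            cache.popitem(last=False)  # evict the least recently used entry
    return misses


random.seed(0)
lookups = [random.randrange(100_000) for _ in range(10_000)]
# A drive with DRAM is modelled as holding the whole map, so it needs no extra fetches.
print("DRAM-less, 1,000 cached entries:", count_map_fetches(lookups, 1_000))
```

With random access spread across a large drive, the vast majority of lookups miss the small map cache, whereas a drive that keeps its whole map in DRAM avoids those extra flash reads entirely. Repeated access to a small set of locations fares much better.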

Do SSDs Need Cache?

Recall that SSDs are standalone storage devices in their own right.

They were made to compete with, and possibly make obsolete, the conventional HDDs that were traditionally used.

Most HDDs in use today actually have a built-in cache buffer. For them, this is an essential feature if they are to achieve faster read/write times.

SSDs are made using faster memory cells (flash memory chips) and, as a result, have quicker read and write times. This advantage over conventional HDDs makes a data cache far less important for them.

That said, SSDs conventionally still ship with a cache in volatile memory (examples of volatile memory are SDRAM and SRAM).

When selecting a new SSD for whatever needs you may have, the size of its cache shouldn’t be a deciding factor.

Many sellers don’t even list it, since its effect is far less noticeable than it would be on an HDD.

The flash memory chips are fast in the first place, so a cache on an SSD isn’t as important.

Are There Different Types of SSD Cache?

When looking into SSD caching, you’ll find several options. Each has its own advantages and disadvantages, and the right choice comes down to the kind of service you need.

Here, we’ll briefly highlight three styles of SSD caching: write-back, write-around, and write-through caching.

Write-Back Caching

In this mode of SSD caching, data is written completely to the SSD cache first and only afterwards copied to the main storage location.

Recall that caching keeps your most frequently used apps and resources on standby. This in itself is already an advantage.

Giving the SSD cache priority over the main storage during task execution improves system performance by an even wider margin. This feature, however, comes with a catch.

If the cache fails, data loss could be significant. Remember that in this mode, data is written to the SSD cache first.

If it fails before it has finished writing to the main storage location, the data will be lost.

As such, when implementing this mode of caching, you should also take precautions such as mirroring the cache.
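The behaviour and the risk described above can be sketched in a few lines of Python. This is a hypothetical, in-memory model (plain dictionaries stand in for the SSD cache and the main drive, and the class and method names are made up); it tracks which writes are still "dirty", meaning they exist only in the cache, so you can see exactly what a cache failure would lose. Mirroring, which would keep a second copy of those dirty writes, is not modelled.

```python
from __future__ import annotations


class WriteBackCache:
    """Hypothetical write-back cache in front of a slower main storage."""

    def __init__(self, main_storage: dict[str, bytes]) -> None:
        self.main = main_storage
        self.cache: dict[str, bytes] = {}
        self.dirty: set[str] = set()  # keys written to the cache but not yet to main storage

    def write(self, key: str, data: bytes) -> None:
        self.cache[key] = data
        self.dirty.add(key)  # the write counts as done now; main storage is updated later

    def read(self, key: str) -> bytes:
        if key in self.cache:
            return self.cache[key]
        return self.main[key]

    def flush(self) -> None:
        """Copy every pending write to main storage (e.g. in the background)."""
        for key in self.dirty:
            self.main[key] = self.cache[key]
        self.dirty.clear()

    def fail(self) -> set[str]:
        """Simulate the SSD cache dying; return the keys whose latest data is lost."""
        lost = set(self.dirty)
        self.cache.clear()
        self.dirty.clear()
        return lost


main: dict[str, bytes] = {}
wb = WriteBackCache(main)
wb.write("report", b"v1")
wb.flush()               # "report" is now safe on main storage
wb.write("notes", b"draft")
print(wb.fail())         # {'notes'}: it never reached main storage
```

Writes return as soon as the fast cache has them, which is where the speed comes from; anything still in `dirty` when the cache fails is gone.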

Write-Around Caching

In this mode, data is written directly to the main storage location, bypassing the cache. It only makes its way into the cache later, when it is read.

This means the SSD cache needs a sort of ‘warmup’ period as it gradually accumulates the data and resources that are found to be frequently accessed.

Though this process may be slow, it has a clear advantage: it essentially guarantees that rarely used programs, data, and resources won’t fill valuable cache space.
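Here is a minimal Python sketch of write-around behaviour, assuming plain dictionaries for the SSD cache and the main drive and using hypothetical names. Writes go only to main storage (dropping any stale cached copy), and data enters the cache only when it is read. Eviction is left out for brevity; a real cache would have a size limit.

```python
from __future__ import annotations


class WriteAroundCache:
    """Hypothetical write-around cache: writes skip the cache, reads fill it."""

    def __init__(self, main_storage: dict[str, bytes]) -> None:
        self.main = main_storage
        self.cache: dict[str, bytes] = {}

    def write(self, key: str, data: bytes) -> None:
        self.main[key] = data      # goes straight to main storage
        self.cache.pop(key, None)  # drop any stale cached copy

    def read(self, key: str) -> bytes:
        if key in self.cache:
            return self.cache[key]  # hit: served from the SSD cache
        data = self.main[key]       # miss: slower read from main storage
        self.cache[key] = data      # "warm up": cache only what is actually read
        return data


wa = WriteAroundCache({})
wa.write("archive.zip", b"...")  # written once, never read back
wa.write("game.exe", b"...")
wa.read("game.exe")
print(sorted(wa.cache))          # ['game.exe']
```

The archive that is written but never read never takes up cache space, while the program that is actually used gets cached after its first read.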

Write-Through Caching

This mode of SSD caching has become a popular choice.

It works by writing the data to the SSD cache and the main storage simultaneously. The data is also unavailable from the cache until it has been completely written into the main storage.

As a result, write operations can be slow in this mode, since each one has to wait for the main storage.
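A minimal Python sketch of write-through behaviour, again assuming plain dictionaries for the SSD cache and the main drive and using hypothetical names. In this simplified, single-threaded model the two writes happen one after the other, with main storage first, so the cache never holds data that main storage lacks; that is why losing the cache costs nothing except speed.

```python
from __future__ import annotations


class WriteThroughCache:
    """Hypothetical write-through cache: every write updates both copies."""

    def __init__(self, main_storage: dict[str, bytes]) -> None:
        self.main = main_storage
        self.cache: dict[str, bytes] = {}

    def write(self, key: str, data: bytes) -> None:
        self.main[key] = data   # the slower write to main storage happens first...
        self.cache[key] = data  # ...so the cache never holds data main storage lacks
        # Only now would the write be reported as complete.

    def read(self, key: str) -> bytes:
        if key in self.cache:
            return self.cache[key]
        data = self.main[key]
        self.cache[key] = data
        return data

    def fail(self) -> None:
        """Simulate losing the SSD cache; main storage already has everything."""
        self.cache.clear()


main: dict[str, bytes] = {}
wt = WriteThroughCache(main)
wt.write("photo.jpg", b"...")
wt.fail()
print(main)  # {'photo.jpg': b'...'}: nothing was lost
```

The price of this safety is that every write waits for the slower main storage, while reads of recently written data can still come straight from the cache.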

Which SSDs Have DRAM Cache?

DRAM cache is responsible for holding the ‘directions’, so to speak, to the data stored on an SSD.

That simple fact is one of the factors that have made SSDs one of the fastest storage options available.

That being said, recent technological trends have seen a shift in this view.

DRAM-less SSDs have slowly made their way onto the market. They are becoming popular because leaving out the DRAM makes them cheaper than ‘normal’ SSDs.

Though they still provide faster speeds than conventional HDDs, they can’t match the performance of an SSD with DRAM.

Fortunately, manufacturers usually state whether an SSD has DRAM, although you may need to check the detailed specifications to find out.

So check the specifications if you’re interested in upgrading to an SSD; whether you choose an SSD with DRAM or a DRAM-less one will come down to affordability versus performance.